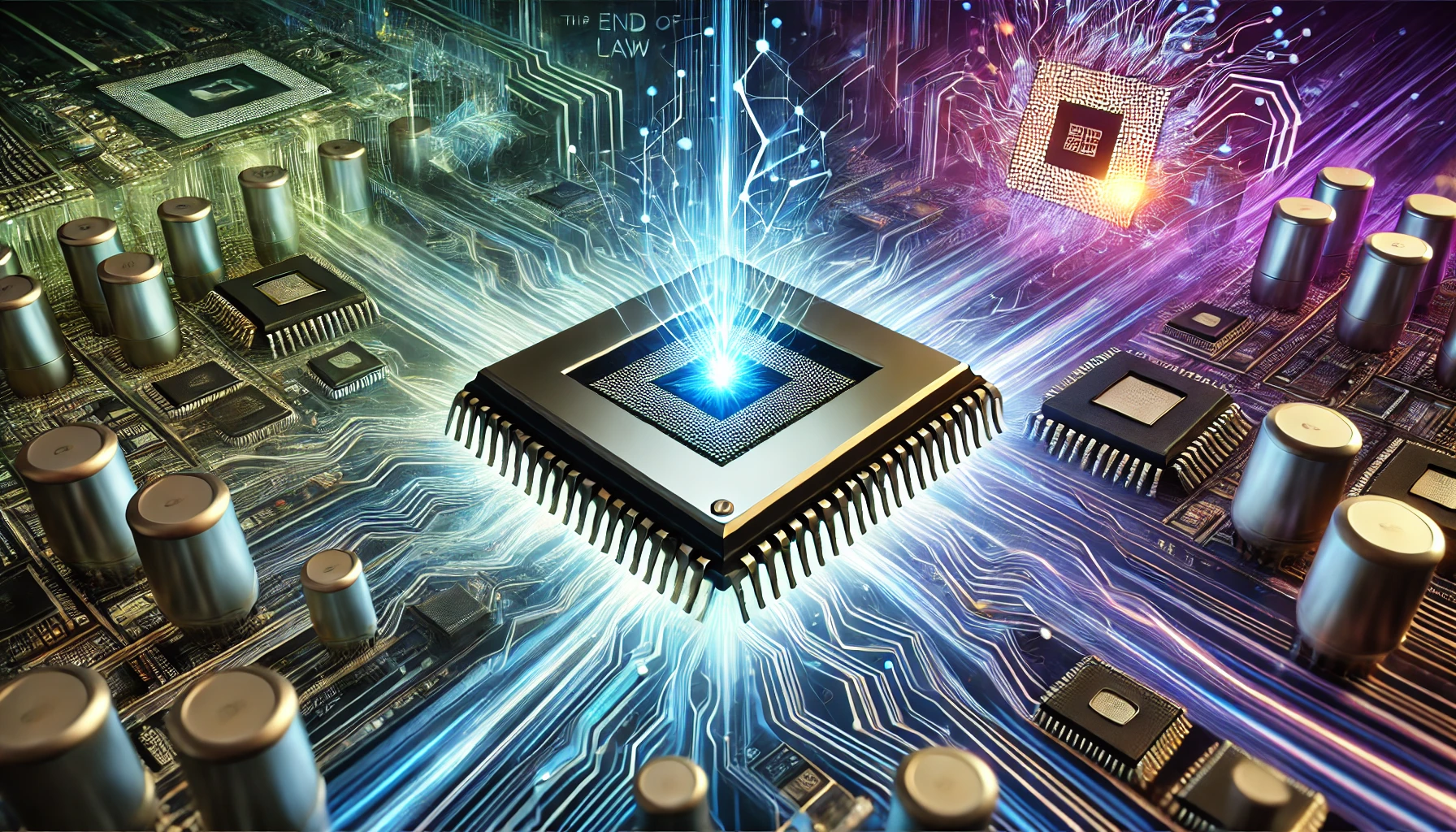

For over five decades, Moore’s Law has been the north star guiding the semiconductor industry. This simple yet profound observation, first made in 1965 by Gordon Moore (who went on to co-found Intel), predicted that the number of transistors on a microchip would keep doubling at a steady rate: every year in his original forecast, revised in 1975 to approximately every two years. This relentless pace of innovation not only transformed the tech industry but also fueled the exponential growth of computing power, enabling everything from the smartphone revolution to AI breakthroughs. But today, the cracks in Moore’s Law are becoming impossible to ignore. So, what happens now?

Let’s dive into what the death of Moore’s Law really means, the challenges it presents, and the exciting technologies that are stepping up to fill the void.

Why Is Moore’s Law Fading?

At its core, Moore’s Law relied on shrinking transistors. Smaller transistors mean more can fit onto a chip, and for decades they also switched faster, used less energy, and cost less per transistor. But we’re reaching the physical limits of miniaturization. The smallest features on today’s chips are only a few nanometers across, on the order of tens of atoms. At that scale, quantum effects become a real problem. The biggest is quantum tunneling, where electrons pass through insulating barriers that should stop them, causing leakage current that wastes power and generates heat even when a transistor is supposed to be off.
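A rough back-of-the-envelope sketch shows why thinner barriers leak so badly. It uses the textbook approximation for an electron tunneling through a rectangular barrier, T ≈ exp(−2κd) with κ = √(2m(V − E))/ħ, where d is the barrier thickness. The 1 eV barrier height and the use of the free-electron mass are simplifying assumptions chosen for illustration, not the parameters of any real transistor.

```python
import math

# Physical constants (SI units)
HBAR = 1.054571817e-34         # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837e-31  # free-electron mass, kg (a simplification)
EV = 1.602176634e-19           # one electronvolt in joules


def tunneling_probability(thickness_nm: float, barrier_ev: float = 1.0) -> float:
    """Approximate transmission through a rectangular barrier: T ~ exp(-2 * kappa * d).

    barrier_ev is the barrier height above the electron's energy (V - E).
    The 1 eV default is a hypothetical value for illustration only.
    """
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


for d in (3.0, 2.0, 1.0):
    print(f"{d:.0f} nm barrier: T ~ {tunneling_probability(d):.1e}")
```

Because thickness sits in the exponent, every nanometer of barrier removed multiplies the tunneling probability by roughly 28,000 under these assumptions, which is why leakage grew so quickly as insulating layers thinned.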

Manufacturing at such microscopic scales is also becoming prohibitively expensive. The latest semiconductor fabrication plants (fabs) cost tens of billions of dollars to build and operate. Not to mention, the complexity of designing chips at these scales has skyrocketed, requiring cutting-edge tools, AI-driven design techniques, and years of development.

In short, the exponential progress we’ve enjoyed for decades is slowing down. Chips are still improving, but transistor counts are no longer doubling every two years. This has significant implications for technology, economics, and society at large.
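The difference between doubling periods compounds quickly. If transistor counts follow N(t) = N₀ · 2^(t/T) for a doubling period T, the sketch below compares the classic two-year cadence with a three-year period. The three-year figure is a hypothetical slower pace chosen for illustration, not a measured industry rate, and the function names are illustrative.

```python
def growth_factor(years: float, doubling_period: float) -> float:
    """Cumulative growth if transistor counts double every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def annual_growth_rate(doubling_period: float) -> float:
    """Equivalent compound growth per year, e.g. 0.41 means +41% per year."""
    return 2 ** (1 / doubling_period) - 1


# Two years is the classic Moore's Law cadence; three years is a hypothetical slower pace.
for period in (2.0, 3.0):
    print(f"doubling every {period:g} years: "
          f"{annual_growth_rate(period):.0%} per year, "
          f"{growth_factor(10, period):.1f}x per decade")
```

A two-year doubling compounds to 32x per decade, or about 41% per year; stretching it to three years cuts that to roughly 10x per decade, or about 26% per year.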

The Consequences of Moore’s Law Ending

The slowdown of Moore’s Law forces us to rethink the way we approach computing. For decades, developers relied on increasing hardware performance to paper over software inefficiencies. If your program ran slowly, you could just wait a year or two for faster hardware to fix the problem. This “software bloat” was sustainable in the Moore’s Law era but is now coming back to bite us.

Moreover, industries that depend on high-performance computing—such as AI, scientific research, and gaming—face new challenges. Training state-of-the-art AI models already requires immense computational power, and the demand is only growing. Without the steady performance gains from Moore’s Law, innovation risks hitting a wall.

But it’s not all doom and gloom. In fact, the end of Moore’s Law is sparking a wave of creativity and innovation as researchers and engineers explore new ways to push the boundaries of what’s possible.

What Comes Next?

1. Specialized Hardware

One of the most promising trends is the rise of specialized hardware. Instead of relying on general-purpose CPUs, we’re seeing a shift towards chips designed for specific tasks. Graphics Processing Units (GPUs), for example, have become indispensable for AI and gaming, thanks to their ability to handle parallel computations efficiently.
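Parallel hardware like a GPU only speeds up the part of a program that can actually run in parallel. Amdahl’s law, a standard way to reason about this, gives the overall speedup as 1 / ((1 − p) + p / n), where p is the parallelizable fraction of the runtime and n is the number of parallel workers. The fractions and worker count below are hypothetical, and the model ignores real-world costs such as moving data between CPU and GPU memory.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Overall speedup predicted by Amdahl's law.

    parallel_fraction: share of the original runtime that can run in parallel (0..1).
    workers: number of parallel processing units (idealized, e.g. GPU cores).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


# Hypothetical workloads on an idealized 10,000-worker processor
for p in (0.50, 0.95, 0.999):
    print(f"{p:.1%} parallel -> {amdahl_speedup(p, 10_000):.1f}x speedup")
```

Even with 10,000 workers, a program that is 95% parallel tops out near a 20x speedup because the serial 5% dominates. That is why GPUs shine on workloads like graphics and neural-network training, where nearly all of the work is parallel.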

More recently, we’ve seen the emergence of Tensor Processing Units (TPUs) from Google, designed specifically for machine learning workloads. Similarly, application-specific integrated circuits (ASICs) and field-programmable gate arrays (FPGAs) are being used for tasks ranging from cryptocurrency mining to video encoding. These specialized chips offer significant performance and energy efficiency gains by tailoring hardware to specific use cases.

2. Chiplet Architecture

Traditional monolithic chip designs are giving way to chiplet-based architectures. Instead of building a single large chip, manufacturers create smaller “chiplets” and connect them inside one package. Because a manufacturing defect ruins only the small chiplet it lands on rather than an entire large die, this approach improves yields and reduces costs. It also allows for greater flexibility in design.
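The yield argument can be made concrete with the simple Poisson yield model often used for back-of-the-envelope estimates, Y = exp(−A · D₀), where A is die area and D₀ is the density of killer defects. The defect density and die sizes below are hypothetical round numbers, and real foundries use more elaborate models; the point is only that yield falls exponentially with area.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a Poisson defect model."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 = 1 cm^2
    return math.exp(-area_cm2 * defects_per_cm2)


D0 = 0.1  # hypothetical defect density, defects per cm^2

monolithic = poisson_yield(600, D0)  # one hypothetical 600 mm^2 die
chiplet = poisson_yield(150, D0)     # one of four hypothetical 150 mm^2 chiplets

print(f"600 mm^2 monolithic die yield: {monolithic:.1%}")
print(f"150 mm^2 chiplet yield:        {chiplet:.1%}")
```

Note that four untested chiplets that all had to be good would have the same combined odds as the big die (0.861⁴ ≈ 0.549). The gain comes from testing each chiplet before packaging and combining only known-good ones, so a defect scraps 150 mm² of silicon instead of 600 mm². This sketch ignores the packaging and interconnect costs that chiplet designs do pay.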

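AMD’s Ryzen desktop and EPYC server processors are prime examples: they combine one or more CPU core chiplets with a separate I/O die, delivering impressive performance and efficiency. Apple’s M1 Ultra and M2 Ultra take a related approach, joining two M1 Max or M2 Max dies with a high-speed interconnect. By breaking the chip into modular components, engineers can mix and match technologies, optimize performance, and even combine dies built on different process nodes or at different fabs.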

3. Beyond Silicon

Silicon has been the backbone of semiconductors for decades, but it’s not the only game in town. Researchers are exploring alternative materials like graphene, gallium nitride (GaN), and transition metal dichalcogenides (TMDs). These materials offer properties like higher electron mobility, better thermal conductivity, and the potential for smaller, faster, and more energy-efficient transistors.

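Gallium nitride is already in commercial use in power electronics, such as compact chargers, and in radio-frequency components, but as replacements for silicon in mainstream logic chips these materials are still largely experimental. They could revolutionize the semiconductor industry if they can be mass-produced economically.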

4. Quantum Computing

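Quantum computing is perhaps the most futuristic alternative to traditional computing. By leveraging quantum-mechanical effects such as superposition and entanglement, quantum computers could solve certain problems dramatically faster than classical computers. For some problems, such as factoring large numbers with Shor’s algorithm, the known quantum algorithms vastly outpace the best known classical methods; for others, such as unstructured search with Grover’s algorithm, the speedup is only quadratic. While still in its infancy, the field is advancing rapidly, with companies like IBM and Google, and startups like Rigetti, making significant strides.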

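That said, quantum computing isn’t a direct replacement for traditional computing. Instead, it’s likely to complement classical systems, with the strongest expected payoffs in specific tasks like molecular and materials simulation, certain optimization problems, and cryptography (a large enough quantum computer could break widely used public-key schemes such as RSA).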
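One way to see why quantum hardware is interesting: simulating n qubits exactly on a classical computer means storing 2ⁿ complex amplitudes. The sketch below assumes each amplitude is stored as a 128-bit complex number (two 64-bit floats), which is a common but not universal choice; the function names are illustrative.

```python
BYTES_PER_AMPLITUDE = 16  # one complex number stored as two 64-bit floats


def state_vector_bytes(num_qubits: int) -> int:
    """Memory needed to hold a full n-qubit state vector on a classical machine."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:,.0f} {unit}"
        value /= 1024


for n in (10, 30, 50):
    print(f"{n} qubits: {human_readable(state_vector_bytes(n))}")
```

Each extra qubit doubles the memory, so brute-force state-vector simulation becomes impractical beyond a few dozen qubits. This is a statement about the cost of classical simulation, not proof that any particular quantum algorithm is faster.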

5. Neuromorphic Computing

Inspired by the human brain, neuromorphic computing aims to mimic the brain’s networks of spiking neurons directly in hardware. These systems process information as sparse, event-driven signals, more like the way our brains work, which can make them highly energy-efficient for tasks like pattern recognition and machine learning.

Companies like Intel (with its Loihi chip) are leading the charge in this space, experimenting with chips that use spiking neural networks to perform computations with remarkable energy efficiency.
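Spiking neural networks replace continuous activations with discrete spikes. A common textbook model is the leaky integrate-and-fire (LIF) neuron: its membrane potential leaks toward zero, accumulates input current, and emits a spike when it crosses a threshold, then resets. The sketch below is a generic discrete-time LIF neuron with hypothetical parameters; it is not Loihi’s actual neuron model or programming interface.

```python
def simulate_lif(inputs: list[float], leak: float = 0.9, threshold: float = 1.0) -> list[int]:
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    inputs: input current at each time step (hypothetical units).
    leak: fraction of membrane potential retained per step (0..1).
    threshold: potential at which the neuron fires.
    Returns a list of 0/1 values, one per step, where 1 marks a spike.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current  # leak, then integrate the new input
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


# A weak constant input takes several steps to trigger a spike; zero input never does.
print(simulate_lif([0.3] * 10))
print(simulate_lif([0.0] * 5))
```

Most time steps here produce no spike. Event-driven neuromorphic hardware aims to exploit exactly this sparsity, doing work only when a spike actually occurs.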

6. Software Optimization

As hardware improvements slow, software optimization becomes more critical than ever. Choosing more efficient algorithms, managing memory and other resources carefully, and spreading work across multiple cores can squeeze more performance out of existing hardware.
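As a small illustration of algorithmic efficiency, consider checking a list for duplicates. The two hypothetical functions below return the same answer, but one compares every pair (quadratic work) while the other tracks seen values in a hash set (linear work on average). As inputs grow, that gap outruns any realistic hardware upgrade.

```python
def has_duplicates_quadratic(items: list[int]) -> bool:
    """Compare every pair: roughly n*(n-1)/2 comparisons in the worst case."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items: list[int]) -> bool:
    """Track seen values in a hash set: about n average-case O(1) lookups."""
    seen: set[int] = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


data = list(range(2_000))  # hypothetical input with no duplicates (the worst case)
assert not has_duplicates_quadratic(data) and not has_duplicates_linear(data)

n = 100_000
print(f"pairwise comparisons for n={n:,}: {n * (n - 1) // 2:,}")
print(f"set lookups for n={n:,}: {n:,}")
```

For 100,000 items the pairwise approach needs about five billion comparisons in the worst case, against 100,000 set lookups, a difference no single hardware generation could make up.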

The tech industry is also applying AI and machine learning to software optimization itself. Compilers and code generators, for example, can use machine-learned models in place of hand-written heuristics to decide how to optimize code for a specific hardware configuration.

Embracing the Post-Moore Era

The death of Moore’s Law marks the end of an era, but it’s also the beginning of a new chapter in computing. The challenges we face are spurring innovation across multiple fronts, from hardware to software, materials science to quantum mechanics. These advancements promise not only to sustain progress but also to reshape the landscape of technology in ways we can’t yet fully imagine.

For tech enthusiasts, engineers, and researchers, this is an exciting time. The slowing of Moore’s Law is not the end of innovation but a call to rethink, reinvent, and redefine what’s possible. The road ahead may be less predictable, but that’s part of the fun. After all, if there’s one thing we’ve learned from Moore’s Law, it’s that technology has a way of surprising us—often in ways we least expect.

So, buckle up. The post-Moore era is here, and it’s going to be a wild ride.
